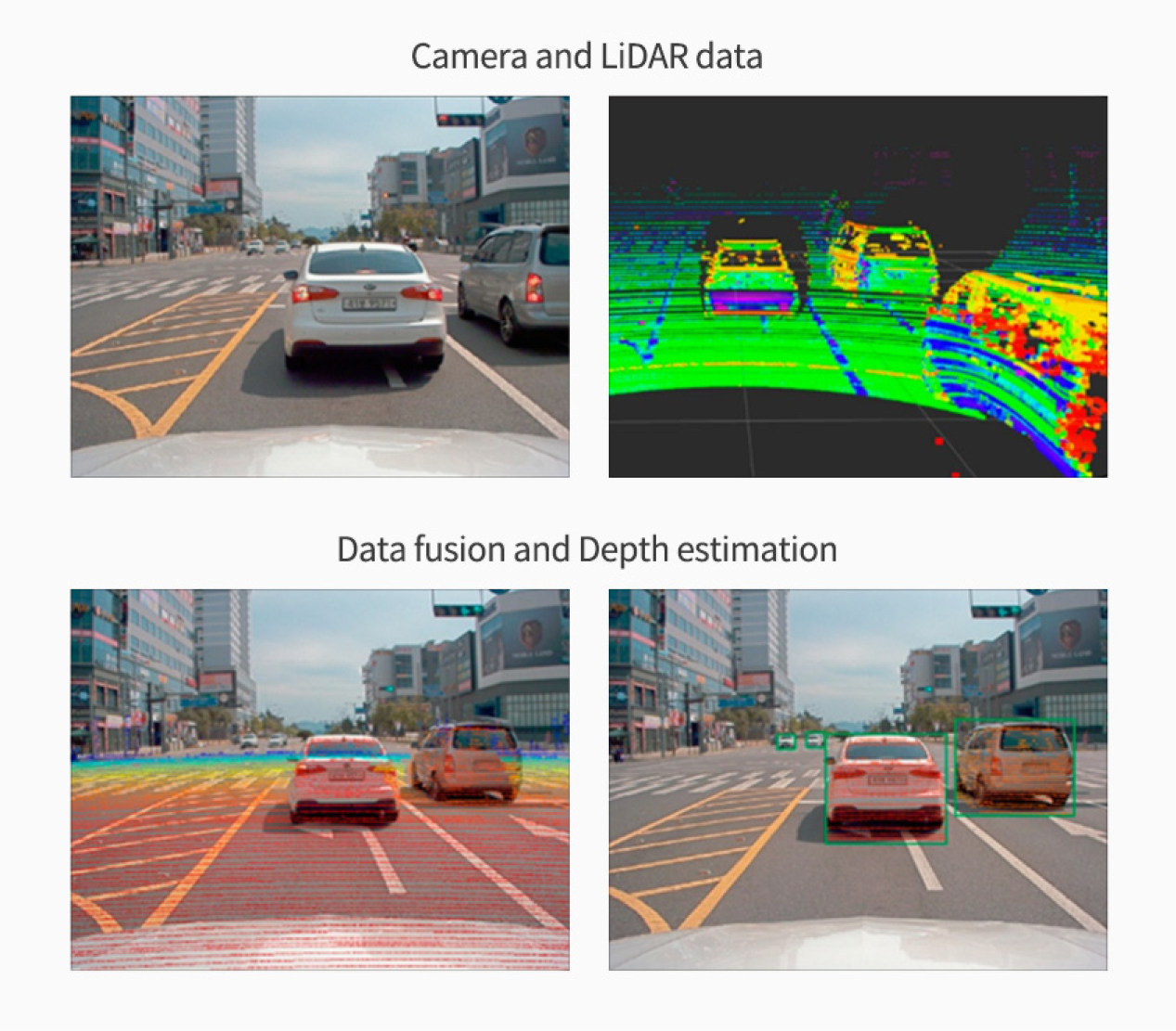

To realize fully autonomous driving, a sensor capable of recognizing three-dimensional space is required. Since implementing 3D vision with a 2D camera requires complex calculations, a LiDAR sensor capable of 3D spatial recognition is currently used. LiDAR recognizes three-dimensional space by emitting laser light across a 360-degree field of view and detecting the light that is reflected back. Each detected return becomes a point with X, Y, and Z coordinates, and the set of points expressed in this way is called a 3D point cloud.

Because a 3D point cloud is processed with 3D deep learning over the X, Y, and Z axes, it requires more computation than deep learning on 2D data, such as camera images, which have only X and Y axes. In addition, since autonomous driving is directly related to driver safety, fast processing of the collected data is essential. Therefore, a lightweight deep learning algorithm that processes LiDAR signals expressed as 3D point clouds at high speed was selected as the first task of the collaborative research.

Also, as an edge device, a car should be able to operate independently even when it is not connected to a server. Because physical space is limited, the available hardware resources are limited as well, so optimizing the hardware design is also important for overcoming these constraints. Therefore, along with research on efficient algorithms, we selected hardware design optimization as the second task of our collaborative research.

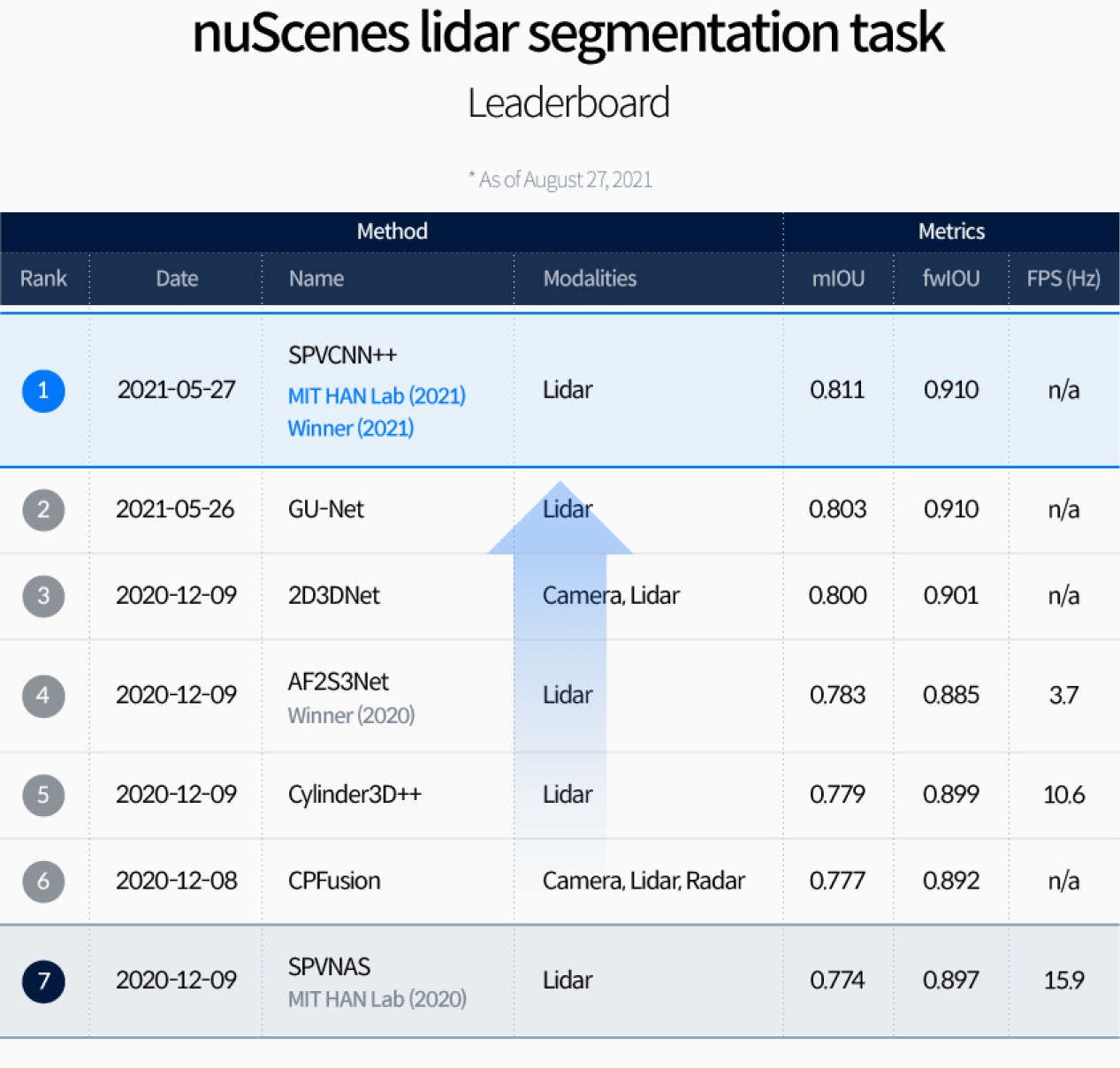

The AI Driving Olympics (AI-DO) is a global competition for AI algorithms related to autonomous driving. Well-known global companies such as Google, Huawei, and Baidu, as well as famous universities such as MIT, Stanford University, and Nanyang Technological University (Singapore), participate in this competition. Through this contest, which uses the NuScenes dataset, participants advance research in the field of autonomous driving by validating and improving their algorithms.

In the above competition, the deep learning algorithm submitted by MIT HAN Lab took first place in the NuScenes LiDAR Segmentation Challenge at the 6th AI Driving Olympics.

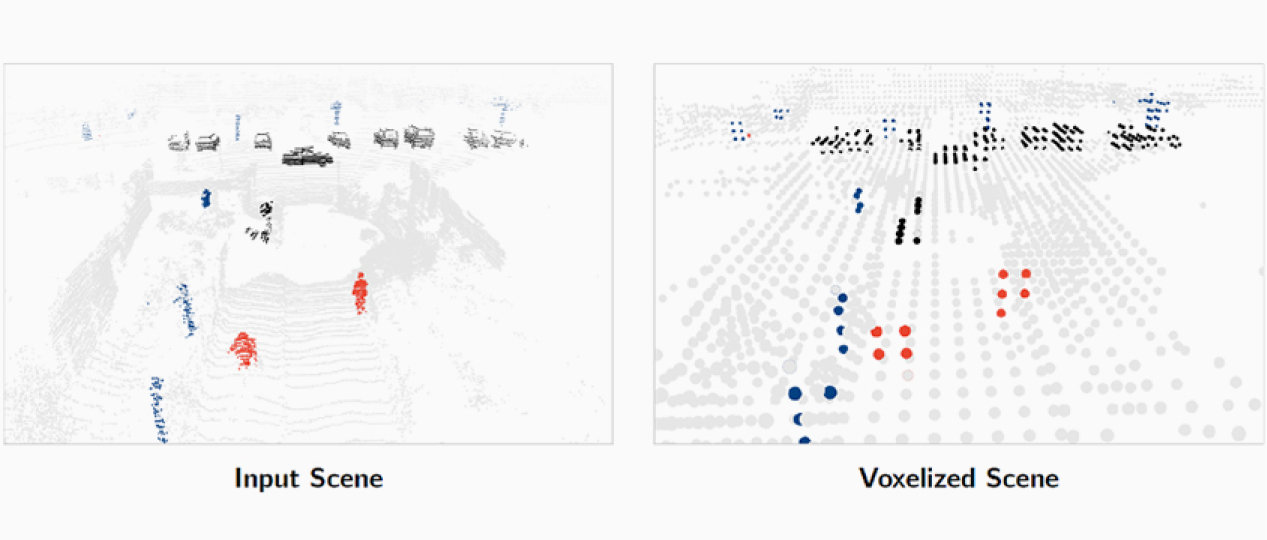

Existing studies have divided the point cloud obtained through LiDAR into a uniform grid of voxels (volume elements, the 3D counterpart of pixels) and then extracted information from the points in each voxel using 3D volumetric convolution. With this approach, if the voxel resolution is low, several points are merged into a single voxel, and information loss may occur. In cars, this can lead to safety concerns. A high resolution is therefore required to reduce information loss, but as the voxel resolution increases, the computation cost and memory requirements increase cubically, so this method is also not easy to use in cars.
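The trade-off between information loss and cubic growth can be illustrated with a small sketch. The Python below is only a toy illustration and not the method used in the studies mentioned: the `voxelize` and `dense_grid_cells` helpers, the four sample points, and the 4 m scene extent are all hypothetical. It groups points by voxel index at two resolutions and counts the cells of a dense grid at each.

```python
from collections import defaultdict
from math import floor


def voxelize(points, voxel_size):
    """Group (x, y, z) points by the integer index of the voxel containing them."""
    voxels = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        voxels[key].append((x, y, z))
    return voxels


def dense_grid_cells(extent, voxel_size):
    """Number of cells in a dense cubic grid covering `extent` metres per axis."""
    cells_per_axis = round(extent / voxel_size)
    return cells_per_axis ** 3


# Hypothetical toy scene: four points, the first two close together.
points = [
    (0.10, 0.10, 0.10),
    (0.30, 0.20, 0.10),
    (1.60, 0.40, 0.20),
    (3.20, 2.90, 0.50),
]

coarse = voxelize(points, voxel_size=1.0)   # low resolution
fine = voxelize(points, voxel_size=0.25)    # high resolution

coarse_cells = dense_grid_cells(4.0, 1.0)   # 4 cells per axis
fine_cells = dense_grid_cells(4.0, 0.25)    # 16 cells per axis

print("occupied voxels:", len(coarse), "coarse vs", len(fine), "fine")
print("dense grid cells:", coarse_cells, "coarse vs", fine_cells, "fine")
```

In this toy scene, 1.0 m voxels put the first two points into the same voxel, so their individual positions are lost, while 0.25 m voxels keep every point in its own voxel. The price is that the dense grid grows from 64 to 4,096 cells: a 4-fold finer resolution costs 64 times as many cells.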

Recently, to solve this problem, algorithms have appeared that extract information directly from the points of the point cloud without voxelizing them. However, because the point cloud is highly sparse, these algorithms produce random memory access patterns, which reduce the overall throughput of the hardware and make them difficult to apply in practice.

In this research project, an algorithm was developed with the goal of combining the strengths of both approaches to solve these problems. The resulting design proved effective and led to good results at the 6th AI Driving Olympics.

We interviewed the project manager, Hee-yeon Nah, and Professor Song Han about the joint research between Hyundai Motor Group and MIT HAN Lab, which has achieved excellent results. Details not covered above can be found in the interview.

Q. How did Hyundai Motor Group and MIT come to conduct joint research?

Professor Song Han | We found a common interest in efficient AI and AI hardware for automotive applications and then started the research.

Q. Please briefly introduce the field of research you are working on with the Hyundai Motor Group.

Professor Song Han | My lab has been working on efficient algorithms, systems, and hardware for deep learning. We are improving the computational efficiency of perception for automotive applications.

Hee-yeon Nah, Senior Researcher | Through joint research with MIT, we are researching an algorithm that can efficiently detect an object using a point cloud and a hardware design that can effectively accelerate this algorithm.

Q. What are the purpose and achievements of the joint research?

Professor Song Han | My lab develops efficient algorithms, systems, and hardware to enable real-time 3D LiDAR perception under resource-constrained hardware platforms. My students have developed an efficient 3D neural network algorithm (SPVCNN), a highly-optimized 3D inference engine (TorchSparse), and a specialized 3D hardware accelerator (PointAcc), leading to several publications in the top-tier conferences in both the deep learning community and the computer architecture community, including NeurIPS, ECCV, ICRA, IROS, and MICRO. Furthermore, my lab has deployed our proposed solution onto the autonomous racing car of MIT Driverless and a full-scale autonomous vehicle.

Hee-yeon Nah, Senior Researcher | Also, the key achievement of the algorithm developed through this study was that it beat out the competitors and won first place in the 6th AI Driving Olympics jointly hosted by Motional and ICRA’21.

Q. What are the characteristics of the SPVCNN and what improvements were made compared to the existing algorithms?

Hee-yeon Nah, Senior Researcher | Research on making deep learning lightweight can be divided into ‘Lightweight Deep Learning Algorithm Research’, which maximizes efficiency compared to existing models by designing the algorithm itself with fewer calculations and an efficient structure, and ‘Model Compression’, which reduces the parameters of an already-created model. In this collaborative project with MIT, the former approach achieved both optimization and weight reduction of the algorithm.

Professor Song Han | SPVCNN provides an automated solution to design the best 3D neural network architecture under a given hardware constraint (e.g., computation, latency, and energy). It also takes advantage of rich unlabeled data (9x more than the labeled data in the nuScenes dataset) to improve the data efficiency. “With the help of our optimized inference engine, SPVCNN can run at real-time (12.8 FPS) on a single NVIDIA GTX 1080Ti GPU, while achieving the state-of-the-art accuracy (more than 81% mIoU on the NuScenes dataset),” say PhD students Zhijian Liu and Haotian Tang from our lab. As a result, our solution ranks first in the NuScenes LiDAR segmentation challenge this year, outperforming all other industry solutions, including those from Google and Huawei.
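For readers unfamiliar with the accuracy figure quoted above, mIoU (mean intersection over union) averages, over the classes, the overlap between predicted and ground-truth labels. The Python below is a minimal sketch of that general definition only: the `mean_iou` helper and the six sample labels are hypothetical, and the official NuScenes evaluation may apply its own rules (for example, which classes are scored) that this sketch does not attempt to reproduce.

```python
def mean_iou(predicted, target, num_classes):
    """Average the per-class intersection over union across classes.

    `predicted` and `target` are equal-length sequences of integer class
    labels, one per point. Classes that appear in neither sequence are
    skipped so that they do not distort the average.
    """
    ious = []
    for c in range(num_classes):
        # Points labelled c in both the prediction and the ground truth.
        intersection = sum(1 for p, t in zip(predicted, target) if p == c and t == c)
        # Points labelled c in the prediction, the ground truth, or both.
        union = sum(1 for p, t in zip(predicted, target) if p == c or t == c)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical labels for six points and three classes.
target = [0, 0, 1, 1, 2, 2]
predicted = [0, 0, 1, 2, 2, 2]

score = mean_iou(predicted, target, num_classes=3)
print(f"mIoU = {score:.4f}")
```

Here the per-class scores are 1 for class 0, 1/2 for class 1, and 2/3 for class 2, so a single mislabelled point out of six already lowers the mean to 13/18, about 0.72.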

Q. Why is the lightening of deep learning models important in autonomous driving and robotics?

Professor Song Han | Deep learning models provide a significant performance improvement over conventional algorithms in the field of autonomous driving and robotics, and the accuracy grows with the amount of training data. Furthermore, with the support of optimized inference engines and specialized hardware, these models can be deployed efficiently in real-world applications, enabling fast and accurate perception and planning.

Q. What does it mean to win first place in the NuScenes LiDAR Segmentation Challenge at the 6th AI Driving Olympics?

Professor Song Han | Winning first place in the NuScenes LiDAR segmentation challenge demonstrates our technology leadership in efficient LiDAR perception for autonomous driving. Our efforts on designing hardware-aware 3D models and learning from unlabeled data are pioneering for real-world autonomous driving applications.

Q. Why is the lightening of deep learning models important in autonomous driving and robotics?

Hee-yeon Nah, Senior Researcher | The study of lightweight deep learning is essential for embedding a model trained in the cloud into an edge device. It brings various benefits, such as reducing battery consumption, computation, latency, and network traffic. Optimization work to achieve this must also be done.

Q. Please introduce the study on hardware architecture, which is another subject of the joint research.

Professor Song Han | Point clouds are highly sparse. However, sparsity is not friendly to general-purpose hardware. To this end, we have developed a specialized hardware accelerator (PointAcc) to accelerate these sparse operations, including finding the sparse neighbors and gathering their features. “PointAcc incorporates a configurable sorter-based sparse mapping unit that efficiently supports flexible types of sparse mapping operations. It is also equipped with a specialized data orchestration unit that exploits layer fusion and caching, effectively reducing the DRAM access by 6.3x,” says PhD student Yujun Lin. As a result, the PointAcc accelerator achieves 3.7x and 79x speedups and 21x and 268x energy savings over GPU and TPU, respectively. PointAcc was recently accepted to MICRO 2021, a top-tier computer architecture conference.

Hee-yeon Nah, Senior Researcher | To explain further, point cloud data has a very low density, unlike 2D image data, which has a high density. Data does not exist for all (X, Y, Z) coordinates. Points are concentrated in some parts of space and do not exist at all in others. Therefore, if the convolution operation used for 2D images is applied to the point cloud as it is, a lot of computation is wasted. It is therefore necessary to study both algorithms that selectively compute only where actual points exist and hardware that efficiently supports this mode of operation.
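The idea of computing only where points exist can be sketched in a few lines. The Python below is a simplified illustration under stated assumptions, not the TorchSparse or PointAcc implementation: the `sparse_conv` and `dense_conv_multiplies` helpers, the single scalar feature per voxel, the 32-cell grid, and the three sample voxels are all hypothetical. It stores occupied voxels in a dictionary, produces outputs only at occupied voxels, and skips empty neighbors.

```python
from itertools import product


def dense_conv_multiplies(grid_size, kernel_size=3):
    """Multiplications a dense 3D convolution performs over a cubic grid."""
    return grid_size ** 3 * kernel_size ** 3


def sparse_conv(features, kernel_weights):
    """Convolve only at occupied voxels, skipping empty neighbors.

    `features` maps occupied (x, y, z) voxel indices to a scalar feature.
    `kernel_weights` maps (dx, dy, dz) offsets to a scalar weight.
    Returns the output features and the number of multiplications done.
    """
    outputs = {}
    multiplies = 0
    for (x, y, z) in features:
        acc = 0.0
        for (dx, dy, dz), weight in kernel_weights.items():
            neighbor = (x + dx, y + dy, z + dz)
            if neighbor in features:  # dictionary lookup finds occupied neighbors
                acc += weight * features[neighbor]
                multiplies += 1
        outputs[(x, y, z)] = acc
    return outputs, multiplies


# Hypothetical 3x3x3 kernel with every weight set to 1.0.
kernel = {offset: 1.0 for offset in product((-1, 0, 1), repeat=3)}

# Hypothetical scene: three occupied voxels inside a 32 x 32 x 32 grid.
features = {(0, 0, 0): 1.0, (1, 0, 0): 2.0, (10, 10, 10): 3.0}

outputs, sparse_multiplies = sparse_conv(features, kernel)
dense_multiplies = dense_conv_multiplies(grid_size=32)

print("sparse multiplications:", sparse_multiplies)
print("dense multiplications:", dense_multiplies)
```

In this toy case the sparse version performs 5 multiplications, while a dense convolution over the same grid would perform 884,736, almost all of them on empty space. The dictionary lookup also hints at why such workloads are awkward for general-purpose hardware: the neighbors of a voxel are not stored next to each other in memory.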

Q. Are you planning to continue the joint research with Hyundai Motor Group? If so, what subjects are you planning to research?

Professor Song Han | We are planning to continue the cooperative research with the Hyundai Motor Group. We will focus on co-designing neural networks and inference engines for autonomous driving perception, including the full stack design from algorithms to accelerator prototypes. We aim for high-accuracy, light-weight models running on efficient hardware architecture, making AI faster, greener and more accessible to everyone.

Q. Do you have any advice for students who are interested in AI and autonomous driving?

Professor Song Han | This is a golden age for efficient deep learning research, and there’s a vast opportunity to make a real-world impact. I would encourage finding real-world problems by collaborating with industry partners, and aiming for ambitious moonshots on interdisciplinary topics. You are welcome to join our efforts.

This has been an introduction to Hyundai Motor Group’s joint research with MIT Professor Song Han and its achievements. For the realization of safe autonomous driving, we plan to continue our research on the model compression and hardware optimization of deep learning algorithms. In addition, Hyundai Motor Group is conducting various forms of cooperative research with excellent external organizations. We ask for your interest and support so that we can continue our in-depth research with the best partners.

Sang-hoon Shin, Senior Researcher, Hyundai Motor Group

I am in charge of applying new technologies to automobiles and discovering new business opportunities.

Hee-yeon Nah, Senior Researcher, Hyundai Motor Group

I am in charge of research and development in the field of computer vision using deep learning.

Professor Song Han, MIT

I have been researching efficient algorithms, systems, and hardware for deep learning. Recently, I have been interested in improving the cognitive performance and efficiency of autonomous vehicles.